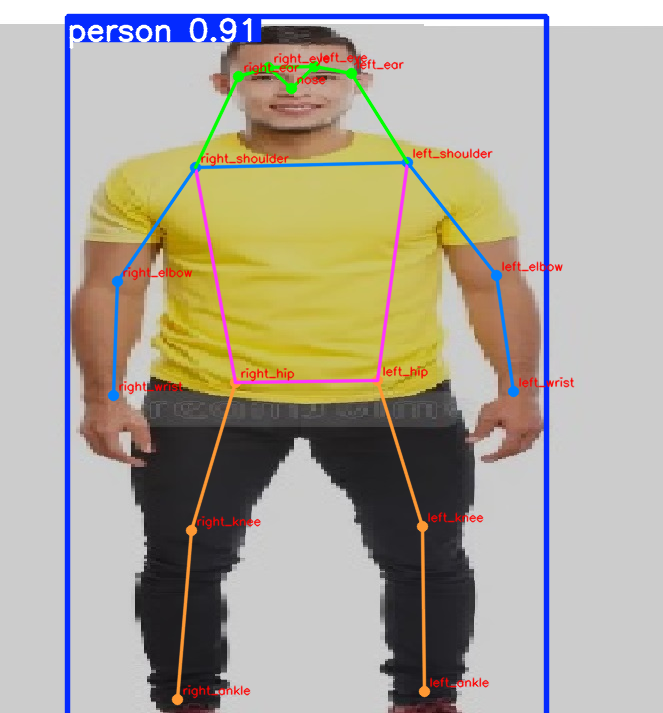

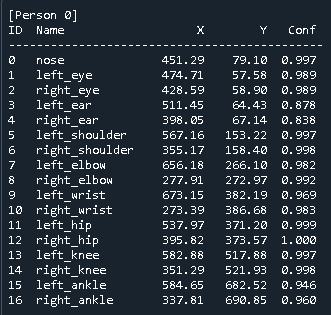

Artificial Intelligence RB14-13: Detecting Human Body Parts

https://docs.ultralytics.com/tasks/pose/

```python
# -*- coding: utf-8 -*-
"""
YOLOv8-Pose: detect persons, draw skeleton, print keypoints in a table,
save + show result with RED names drawn on the image (OpenCV window).
"""

import sys
from pathlib import Path

import cv2
import numpy as np
from ultralytics import YOLO

# ======================================================
# Input and output paths
# ======================================================
image_path = r"d:\temp\person1.jpeg"
output_path = r"d:\temp\person1_pose_outline.jpg"
MODEL_WEIGHTS = "yolov8n-pose.pt"

# Keypoint names in COCO order
KP_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle"
]


def main():
    # Load model and image
    model = YOLO(MODEL_WEIGHTS)
    img = cv2.imread(image_path)
    if img is None:
        print(f"ERROR: Could not read {image_path}")
        sys.exit(1)

    results = model(img, verbose=False)
    res = results[0]

    # Stop early when the result holds no keypoints or no persons
    if res.keypoints is None or len(res.keypoints.xy) == 0:
        print("No keypoints detected.")
        return

    xy = res.keypoints.xy.cpu().numpy()  # (N, 17, 2) pixel coordinates
    conf = res.keypoints.conf.cpu().numpy() if res.keypoints.conf is not None else None

    # === Print in table format ===
    print("\n=== Keypoint Table ===")
    for pid in range(xy.shape[0]):
        print(f"\n[Person {pid}]")
        print(f"{'ID':<3} {'Name':<15} {'X':>8} {'Y':>8} {'Conf':>6}")
        print("-" * 45)
        for k, (xk, yk) in enumerate(xy[pid]):
            c = conf[pid][k] if conf is not None else 1.0
            print(f"{k:<3} {KP_NAMES[k]:<15} {xk:8.2f} {yk:8.2f} {c:6.3f}")

    # === Visualization ===
    plotted = res.plot()  # skeleton and keypoints drawn by Ultralytics
    overlay = plotted.copy()

    # Add RED keypoint names on top of plotted image
    for pid in range(xy.shape[0]):
        for k, (xk, yk) in enumerate(xy[pid]):
            if conf is None or conf[pid][k] > 0.3:
                cv2.putText(
                    overlay, KP_NAMES[k],
                    (int(xk) + 5, int(yk) - 5),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.4,
                    (0, 0, 255),  # RED (OpenCV uses BGR order)
                    1, cv2.LINE_AA
                )

    # Save
    cv2.imwrite(output_path, overlay)
    print(f"\n[OK] Saved visualization with red names to {output_path}")

    # === Show in OpenCV Window ===
    cv2.imshow("YOLOv8 Pose Output", overlay)
    cv2.waitKey(0)  # waits for a key press
    cv2.destroyAllWindows()


if __name__ == "__main__":
    main()
```
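The script above prints every keypoint, including ones the model is unsure about. As a minimal sketch, one person's keypoints can be collected into a dictionary keyed by name, keeping only points above a confidence threshold. The helper name `keypoints_to_dict` and the sample coordinates are hypothetical and not part of the Ultralytics API; plain Python lists stand in for the NumPy arrays so the example runs on its own.

```python
# COCO-17 keypoint order, same as in the script above
KP_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle"
]


def keypoints_to_dict(xy, conf=None, min_conf=0.3):
    """Map keypoint name -> (x, y, conf) for one person, dropping weak points.

    xy   : sequence of 17 (x, y) pairs in KP_NAMES order
    conf : sequence of 17 confidences, or None (each point then counts as 1.0)
    """
    out = {}
    for k, (x, y) in enumerate(xy):
        c = 1.0 if conf is None else float(conf[k])
        if c > min_conf:
            out[KP_NAMES[k]] = (float(x), float(y), c)
    return out


# Hypothetical sample: only the nose and left shoulder are confident
sample_xy = [(0.0, 0.0) for _ in range(17)]
sample_conf = [0.0] * 17
sample_xy[0], sample_conf[0] = (120.5, 80.0), 0.95   # nose
sample_xy[5], sample_conf[5] = (100.0, 150.0), 0.60  # left_shoulder
sample_xy[9], sample_conf[9] = (90.0, 220.0), 0.10   # left_wrist, below threshold

visible = keypoints_to_dict(sample_xy, sample_conf)
print(sorted(visible))  # ['left_shoulder', 'nose']
```

With real results, `xy[pid]` and `conf[pid]` from the script could be passed in place of the sample lists.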

Loading a video

Free videos that can be downloaded:

Pose video

```python
# -*- coding: utf-8 -*-
"""
Video → YOLOv8-Pose per frame → keypoints to CSV/JSON.
Also shows an annotated live window (ESC to quit).

Outputs:
- CSV: frame, person_id, kp_id, kp_name, x, y, conf
- JSON: list of frames with persons and their keypoints
"""

import sys, csv, json, time
from pathlib import Path

import cv2
import numpy as np
from ultralytics import YOLO

# ================================
# Paths & settings
# ================================
video_path = r"D:\temp\dancing2.mp4"
csv_path = r"D:\temp\pose_keypoints.csv"
json_path = r"D:\temp\pose_keypoints.json"

MODEL_WEIGHTS = "yolov8n-pose.pt"  # or yolov8x-pose.pt for better accuracy
IMGSZ = 640       # inference size
CONF_TH = 0.25    # detection confidence
IOU_TH = 0.45     # NMS IOU
DEVICE = None     # None=auto, 0=GPU0, 'cpu'=force CPU
SHOW = True       # show live window
DRAW_IDS = True   # draw person IDs at nose
SKIP = 0          # 0 = process all frames; 1 = every 2nd, 4 = every 5th, etc.

# COCO keypoints order used by YOLOv8-Pose (17 points)
KP_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle"
]


def open_video(path: str):
    """Open a video with OpenCV; try FFMPEG backend first."""
    cap = cv2.VideoCapture(path, cv2.CAP_FFMPEG)
    if not cap.isOpened():
        cap = cv2.VideoCapture(path)
    return cap


def main():
    # --- open video ---
    cap = open_video(video_path)
    if not cap or not cap.isOpened():
        print(f"[ERROR] Could not open video: {video_path}")
        sys.exit(1)

    # --- load model ---
    try:
        model = YOLO(MODEL_WEIGHTS)
    except Exception as e:
        print(f"[ERROR] Could not load model '{MODEL_WEIGHTS}': {e}")
        sys.exit(1)

    # --- prepare outputs ---
    out_dir = Path(csv_path).parent
    out_dir.mkdir(parents=True, exist_ok=True)

    csv_file = open(csv_path, "w", newline="", encoding="utf-8")
    csv_writer = csv.writer(csv_file)
    csv_writer.writerow(["frame", "person_id", "kp_id", "kp_name", "x", "y", "conf"])

    json_frames = []  # list of {"frame": i, "persons": [ {id, keypoints:[...]} ]}

    # --- process loop ---
    frame_idx = 0
    t0 = time.time()

    try:
        if SHOW:
            cv2.namedWindow("Pose Preview", cv2.WINDOW_NORMAL)

        while True:
            ok, frame = cap.read()
            if not ok:
                break

            if SKIP and (frame_idx % (SKIP + 1) != 0):
                frame_idx += 1
                continue

            # --- inference ---
            results = model(
                frame,
                imgsz=IMGSZ,
                conf=CONF_TH,
                iou=IOU_TH,
                device=DEVICE,
                verbose=False
            )
            res = results[0]

            frame_record = {"frame": frame_idx, "persons": []}

            if res.keypoints is not None and res.keypoints.xy is not None and len(res.keypoints.xy) > 0:
                xy = res.keypoints.xy.cpu().numpy()  # (N,17,2)
                kpc = res.keypoints.conf.cpu().numpy() if res.keypoints.conf is not None else None  # (N,17)

                # write CSV + JSON
                for pid in range(xy.shape[0]):
                    person_entry = {"id": int(pid), "keypoints": []}
                    for k in range(xy.shape[1]):
                        xk, yk = float(xy[pid, k, 0]), float(xy[pid, k, 1])
                        ck = float(kpc[pid, k]) if kpc is not None else 1.0
                        csv_writer.writerow([frame_idx, pid, k, KP_NAMES[k],
                                             f"{xk:.2f}", f"{yk:.2f}", f"{ck:.3f}"])
                        person_entry["keypoints"].append({
                            "id": int(k),
                            "name": KP_NAMES[k],
                            "x": xk, "y": yk, "conf": ck
                        })
                    frame_record["persons"].append(person_entry)

                # annotated preview
                if SHOW:
                    plotted = res.plot()  # draws skeleton & keypoints
                    if DRAW_IDS:
                        for pid in range(xy.shape[0]):
                            x0, y0 = int(xy[pid, 0, 0]), int(xy[pid, 0, 1])  # nose
                            cv2.putText(plotted, f"ID:{pid}", (x0 + 6, y0 - 6),
                                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 1, cv2.LINE_AA)
                    cv2.imshow("Pose Preview", plotted)
                    if cv2.waitKey(1) & 0xFF == 27:  # ESC to stop
                        break
            else:
                # no detections in this frame
                if SHOW:
                    cv2.imshow("Pose Preview", frame)
                    if cv2.waitKey(1) & 0xFF == 27:
                        break

            json_frames.append(frame_record)

            if frame_idx % 50 == 0:
                elapsed = time.time() - t0
                print(f"[INFO] frame {frame_idx} elapsed {elapsed:.1f}s")

            frame_idx += 1

    finally:
        cap.release()
        if SHOW:
            cv2.destroyAllWindows()
        csv_file.close()

    # --- save JSON ---
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(json_frames, f, ensure_ascii=False, indent=2)

    print(f"[OK] Wrote CSV to: {csv_path}")
    print(f"[OK] Wrote JSON to: {json_path}")
    print(f"[OK] Frames processed: {frame_idx}")


if __name__ == "__main__":
    main()
```

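Once the video script has written its CSV, the rows usually need to be regrouped per frame and per person before any analysis. The following is a minimal sketch of that step using only the standard library. The function name `load_keypoints` and the sample rows are hypothetical; the column names follow the header the video script writes (`frame, person_id, kp_id, kp_name, x, y, conf`).

```python
import csv
import io
from collections import defaultdict


def load_keypoints(csv_file):
    """Group CSV rows as {(frame, person_id): {kp_name: (x, y, conf)}}."""
    grouped = defaultdict(dict)
    for row in csv.DictReader(csv_file):
        key = (int(row["frame"]), int(row["person_id"]))
        grouped[key][row["kp_name"]] = (
            float(row["x"]), float(row["y"]), float(row["conf"])
        )
    return dict(grouped)


# Hypothetical sample in the same layout as pose_keypoints.csv
sample = io.StringIO(
    "frame,person_id,kp_id,kp_name,x,y,conf\n"
    "0,0,0,nose,320.10,95.40,0.981\n"
    "0,0,5,left_shoulder,290.00,160.25,0.912\n"
    "1,0,0,nose,322.75,96.00,0.975\n"
)

poses = load_keypoints(sample)
print(poses[(0, 0)]["nose"])  # (320.1, 95.4, 0.981)
```

For the real file, open it with `open(csv_path, newline="", encoding="utf-8")` and pass the file object to the function. Note that the tracking script further down names its person column `person` rather than `person_id`, so the key would need adjusting there.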
Pose tracking:

```python
# -*- coding: utf-8 -*-
"""
Video → YOLOv8-Pose (with tracking) → per-frame keypoints + angles
- Live OpenCV window (ESC to quit)
- Console table per frame (+ angles printed)
- Saves: NumPy .npy (structured array), CSV, JSON
"""

import sys, csv, json, time, math
from pathlib import Path

import cv2
import numpy as np
from ultralytics import YOLO

# ================================
# Paths & settings
# ================================
video_path = r"D:\temp\dancing1.mp4"
out_base = Path(r"D:\temp\pose_tracking")
out_base.mkdir(parents=True, exist_ok=True)

csv_path = out_base / "pose_keypoints.csv"
json_path = out_base / "pose_keypoints.json"
npy_path = out_base / "pose_keypoints.npy"

MODEL_WEIGHTS = "yolov8n-pose.pt"
IMGSZ = 640
CONF_TH = 0.25
IOU_TH = 0.45
DEVICE = None
SHOW = True
WINDOW = "Pose Tracking"
KP_CONF_VIS = 0.30  # minimum keypoint confidence for drawing its name

# COCO-17 keypoint names
KP_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle"
]

# Index constants matching KP_NAMES
(NOSE, LEYE, REYE, LEAR, REAR, LSHO, RSHO, LELB, RELB,
 LWR, RWR, LHIP, RHIP, LKNEE, RKNEE, LANK, RANK) = range(17)

# One row per keypoint; the per-person angles are repeated on each row
ROW_DTYPE = np.dtype([
    ("frame", "i4"), ("person", "i4"), ("kp_id", "i4"), ("kp_name", "U16"),
    ("x", "f4"), ("y", "f4"), ("conf", "f4"),
    ("left_knee", "f4"), ("right_knee", "f4"),
    ("left_elbow", "f4"), ("right_elbow", "f4"),
    ("left_hip", "f4"), ("right_hip", "f4"),
    ("head_yaw_deg", "f4"), ("head_pitch_proxy_deg", "f4")
])


def open_video(path: str):
    """Open a video with OpenCV; try FFMPEG backend first."""
    cap = cv2.VideoCapture(path, cv2.CAP_FFMPEG)
    if not cap.isOpened():
        cap = cv2.VideoCapture(path)
    return cap


# ---------- geometry helpers ----------
def _angle(A, B, C):
    """Angle ABC in degrees (at vertex B); NaN if a segment has zero length."""
    BA = A - B
    BC = C - B
    na = np.linalg.norm(BA)
    nc = np.linalg.norm(BC)
    if na < 1e-6 or nc < 1e-6:
        return float("nan")
    cosv = np.clip(float(np.dot(BA, BC) / (na * nc)), -1.0, 1.0)
    return math.degrees(math.acos(cosv))


def _perp(v):
    """Vector perpendicular to the 2D vector v."""
    return np.array([-v[1], v[0]], dtype=np.float32)


def head_yaw_pitch_proxy(xy):
    """Rough 2D proxies for head yaw and pitch from eyes, nose, and shoulders."""
    L, R = xy[LEYE], xy[REYE]
    if np.linalg.norm(R - L) < 1e-6:
        return float("nan"), float("nan")
    E = 0.5 * (L + R)       # midpoint between the eyes
    v_eye = R - L           # eye line
    m_face = _perp(v_eye)   # perpendicular to the eye line
    u = xy[NOSE] - E
    yaw = math.degrees(math.atan2(
        abs(u[0] * m_face[1] - u[1] * m_face[0]),
        float(u[0] * m_face[0] + u[1] * m_face[1])))
    yaw *= 1.0 if (u[0] * v_eye[0] + u[1] * v_eye[1]) >= 0 else -1.0

    LS, RS = xy[LSHO], xy[RSHO]
    if np.linalg.norm(RS - LS) < 1e-6:
        return yaw, float("nan")
    S = 0.5 * (LS + RS)      # midpoint between the shoulders
    v_sho = RS - LS          # shoulder line
    m_torso = _perp(v_sho)   # perpendicular to the shoulder line
    u2 = xy[NOSE] - S
    pitch = math.degrees(math.atan2(
        abs(u2[0] * m_torso[1] - u2[1] * m_torso[0]),
        float(u2[0] * m_torso[0] + u2[1] * m_torso[1])))
    pitch *= 1.0 if (u2[0] * v_sho[0] + u2[1] * v_sho[1]) >= 0 else -1.0
    return yaw, pitch


def compute_angles(xy):
    """Joint angles in degrees for one person's (17, 2) keypoint array."""
    return {
        "left_knee": _angle(xy[LHIP], xy[LKNEE], xy[LANK]),
        "right_knee": _angle(xy[RHIP], xy[RKNEE], xy[RANK]),
        "left_elbow": _angle(xy[LSHO], xy[LELB], xy[LWR]),
        "right_elbow": _angle(xy[RSHO], xy[RELB], xy[RWR]),
        "left_hip": _angle(xy[LSHO], xy[LHIP], xy[LKNEE]),
        "right_hip": _angle(xy[RSHO], xy[RHIP], xy[RKNEE]),
    }


def main():
    cap = open_video(video_path)
    if not cap.isOpened():
        print(f"[ERROR] Could not open: {video_path}")
        sys.exit(1)

    try:
        model = YOLO(MODEL_WEIGHTS)
    except Exception as e:
        print(f"[ERROR] load model: {e}")
        sys.exit(1)

    csv_f = open(csv_path, "w", newline="", encoding="utf-8")
    csv_w = csv.writer(csv_f)
    csv_w.writerow([
        "frame", "person", "kp_id", "kp_name", "x", "y", "conf",
        "left_knee", "right_knee", "left_elbow", "right_elbow",
        "left_hip", "right_hip", "head_yaw_deg", "head_pitch_proxy_deg"
    ])

    json_frames = []
    rows = []

    if SHOW:
        cv2.namedWindow(WINDOW, cv2.WINDOW_NORMAL)
        cv2.resizeWindow(WINDOW, 1024, 576)

    frame_idx = 0
    t0 = time.time()

    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break

            # persist=True keeps tracker state between calls, so IDs carry over frames
            results = model.track(
                frame,
                imgsz=IMGSZ,
                conf=CONF_TH,
                iou=IOU_TH,
                tracker="bytetrack.yaml",
                persist=True,
                device=DEVICE,
                verbose=False
            )
            res = results[0]

            print(f"\n=== Frame {frame_idx} ===")
            frame_record = {"frame": frame_idx, "persons": []}

            if res.keypoints is not None and res.keypoints.xy is not None and len(res.keypoints.xy) > 0:
                xy_all = res.keypoints.xy.cpu().numpy().astype(np.float32)
                kc_all = (res.keypoints.conf.cpu().numpy().astype(np.float32)
                          if res.keypoints.conf is not None else None)
                # Track IDs; fall back to per-frame indices when the tracker gives none
                ids = (res.boxes.id.cpu().numpy().astype(int)
                       if (res.boxes is not None and res.boxes.id is not None)
                       else np.arange(xy_all.shape[0], dtype=int))

                plotted = res.plot() if SHOW else None

                for i in range(xy_all.shape[0]):
                    pid = int(ids[i])
                    xy = xy_all[i]
                    confs = kc_all[i] if kc_all is not None else np.ones((17,), dtype=np.float32)

                    angles = compute_angles(xy)
                    yaw_deg, pitch_deg = head_yaw_pitch_proxy(xy)

                    # --- KEYPOINT TABLE PRINT ---
                    print(f"[Person {pid}]")
                    print(f"{'ID':<3} {'Name':<16} {'X':>8} {'Y':>8} {'Conf':>6}")
                    print("---------------------------------------------------------")

                    person_entry = {
                        "id": pid,
                        "keypoints": [],
                        "angles": {**angles,
                                   "head_yaw_deg": yaw_deg,
                                   "head_pitch_proxy_deg": pitch_deg}
                    }

                    for k in range(17):
                        xk, yk = float(xy[k, 0]), float(xy[k, 1])
                        ck = float(confs[k])
                        name = KP_NAMES[k]
                        print(f"{k:<3} {name:<16} {xk:8.2f} {yk:8.2f} {ck:6.3f}")

                        rows.append((
                            frame_idx, pid, k, name, xk, yk, ck,
                            angles["left_knee"], angles["right_knee"],
                            angles["left_elbow"], angles["right_elbow"],
                            angles["left_hip"], angles["right_hip"],
                            yaw_deg, pitch_deg
                        ))
                        csv_w.writerow([
                            frame_idx, pid, k, name,
                            f"{xk:.2f}", f"{yk:.2f}", f"{ck:.3f}",
                            f"{angles['left_knee']:.2f}", f"{angles['right_knee']:.2f}",
                            f"{angles['left_elbow']:.2f}", f"{angles['right_elbow']:.2f}",
                            f"{angles['left_hip']:.2f}", f"{angles['right_hip']:.2f}",
                            f"{yaw_deg:.2f}", f"{pitch_deg:.2f}"
                        ])
                        person_entry["keypoints"].append(
                            {"id": k, "name": name, "x": xk, "y": yk, "conf": ck})

                    # --- ANGLES PRINT ---
                    print("Angles (deg): "
                          f"Lknee={angles['left_knee']:.1f}, Rknee={angles['right_knee']:.1f}, "
                          f"Lelbow={angles['left_elbow']:.1f}, Relbow={angles['right_elbow']:.1f}, "
                          f"Lhip={angles['left_hip']:.1f}, Rhip={angles['right_hip']:.1f}, "
                          f"HeadYaw={yaw_deg:.1f}, HeadPitchProxy={pitch_deg:.1f}")

                    frame_record["persons"].append(person_entry)

                    if SHOW and plotted is not None:
                        # Track ID next to the nose, keypoint names next to confident points
                        x0, y0 = int(xy[NOSE, 0]), int(xy[NOSE, 1])
                        cv2.putText(plotted, f"ID:{pid}", (x0 + 6, y0 - 8),
                                    cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2, cv2.LINE_AA)
                        for k in range(17):
                            if confs[k] >= KP_CONF_VIS:
                                tx, ty = int(xy[k, 0]) + 5, int(xy[k, 1]) - 5
                                cv2.putText(plotted, KP_NAMES[k], (tx, ty),
                                            cv2.FONT_HERSHEY_SIMPLEX, 0.45, (0, 0, 255), 1, cv2.LINE_AA)

                if SHOW and plotted is not None:
                    cv2.imshow(WINDOW, plotted)
                    if cv2.waitKey(1) & 0xFF == 27:  # ESC to stop
                        break
            else:
                print("[INFO] no detections")
                if SHOW:
                    cv2.imshow(WINDOW, frame)
                    if cv2.waitKey(1) & 0xFF == 27:
                        break

            json_frames.append(frame_record)

            if frame_idx % 50 == 0 and frame_idx > 0:
                print(f"[INFO] frame {frame_idx} elapsed {time.time() - t0:.1f}s")

            frame_idx += 1

    finally:
        cap.release()
        csv_f.close()
        if SHOW:
            cv2.destroyAllWindows()

    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(json_frames, f, ensure_ascii=False, indent=2)

    kp_array = np.array(rows, dtype=ROW_DTYPE)
    np.save(npy_path, kp_array)

    print(f"\n[OK] CSV -> {csv_path}")
    print(f"[OK] JSON -> {json_path}")
    print(f"[OK] NPY -> {npy_path}")
    print(f"[OK] Total rows: {kp_array.shape[0]} across {frame_idx} frames")


if __name__ == "__main__":
    main()
```
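The joint angles in the tracking script are the angle at the middle joint B between the segments BA and BC, that is, arccos of (BA · BC) / (|BA| |BC|). Below is a minimal standalone sketch of the same calculation using only the `math` module, so it can be checked without OpenCV or a model. The function name `joint_angle_deg` and the sample coordinates are hypothetical.

```python
import math


def joint_angle_deg(a, b, c):
    """Angle at b (degrees) between segments b->a and b->c; NaN if degenerate."""
    bax, bay = a[0] - b[0], a[1] - b[1]
    bcx, bcy = c[0] - b[0], c[1] - b[1]
    na = math.hypot(bax, bay)
    nc = math.hypot(bcx, bcy)
    if na < 1e-6 or nc < 1e-6:
        return float("nan")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain
    cosv = max(-1.0, min(1.0, (bax * bcx + bay * bcy) / (na * nc)))
    return math.degrees(math.acos(cosv))


# Hypothetical pixel coordinates (image y grows downward)
hip, knee = (100.0, 100.0), (100.0, 200.0)
ankle_straight = (100.0, 300.0)  # directly below the knee
ankle_bent = (200.0, 200.0)      # level with the knee, off to the side

straight_leg = joint_angle_deg(hip, knee, ankle_straight)  # 180 degrees
bent_leg = joint_angle_deg(hip, knee, ankle_bent)          # 90 degrees
print(f"{straight_leg:.1f} {bent_leg:.1f}")
```

A fully extended knee or elbow therefore reads close to 180 degrees, and the value drops as the joint bends. Keypoints the model could not locate may carry unreliable coordinates, so checking their confidence before trusting an angle is a reasonable precaution.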